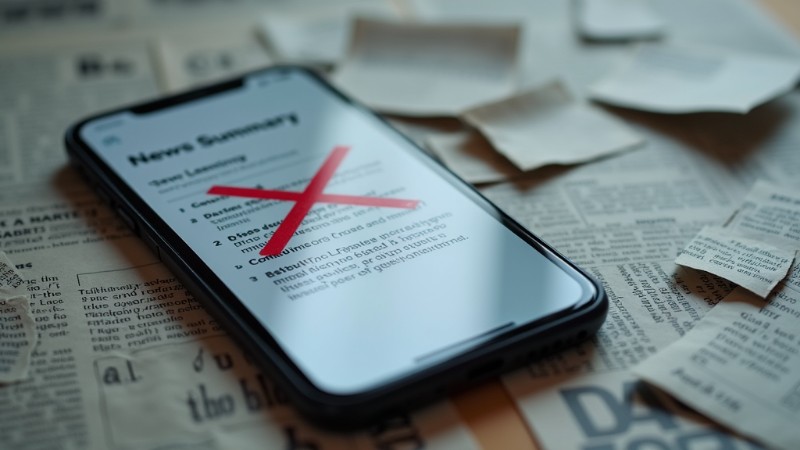

Apple’s AI news alert system has come under scrutiny after incorrectly reporting the outcome of a major sporting event. The feature, part of Apple Intelligence, mistakenly announced that 17-year-old darts player Luke Littler had won the PDC World Darts Championship, even though the final had not yet been played. The incident has sparked widespread criticism and raised questions about the accuracy of AI-generated news summaries.

The error was initially reported on January 2, 2025, one day before the final, after Apple’s AI issued a push notification claiming Littler had secured the championship title. The alert was based on a BBC report detailing Littler’s impressive semi-final victory, but the AI misinterpreted the information. Littler’s final was scheduled for the following day, making the premature announcement both inaccurate and misleading for users relying on the feature for real-time updates.

The BBC, whose article was the source of the misinterpretation, has since called on Apple to address the issue, emphasizing the importance of accurate news dissemination. This incident is part of a growing list of errors attributed to Apple’s AI news summaries.

Key Takeaways

- Apple’s AI news alert system has been criticized for inaccurately reporting the outcome of a major sporting event, raising concerns over the reliability of AI-generated news summaries.

- Apple’s AI feature mistakenly announced that Luke Littler had won the PDC World Darts Championship before the final match occurred, highlighting systemic issues with how Apple’s AI processes and summarizes breaking news.

- The repeated errors have fueled concerns about the reliability of AI-powered news alerts, undermining trust in Apple’s platform and potentially leading to misinformation spreading more widely.

- Experts suggest that the errors may stem from the AI’s reliance on incomplete or ambiguous data, emphasizing the need for robust safeguards in AI design and implementation.

Repeated errors

This isn’t the first time Apple’s AI feature has faced criticism for mishandling news alerts. In another instance, the system falsely claimed that tennis star Rafael Nadal had come out as gay. The incorrect notification, like the one involving Littler, was quickly debunked, but it highlighted systemic issues with how Apple’s AI processes and summarizes breaking news.

Breaking: Apple Intelligence alleged that “Rafael Nadal [came] out as gay” – not because he had, but because an LLM confabulated. pic.twitter.com/8IxWbK5nNu

— Gary Marcus (@GaryMarcus) January 5, 2025

The repeated mistakes have fueled concerns about the reliability of AI-powered news alerts. Critics argue that these errors undermine trust in Apple’s platform and could lead to misinformation spreading more widely. These inaccuracies are not just small oversights; they have the potential to damage reputations and mislead audiences.

The technology behind the problem

Apple Intelligence uses AI algorithms to generate concise news summaries and notifications. While the feature aims to make information more accessible to users, the system appears to struggle with distinguishing between finalized outcomes and ongoing events. This flaw is particularly problematic in the context of live or developing stories.

Experts suggest that the errors may stem from the AI’s reliance on incomplete or ambiguous data. In Littler’s case, the AI likely interpreted the celebratory tone of the semi-final report as confirmation of a championship win. Although Littler did go on to win the final and became the youngest-ever PDC World Darts Champion, the premature notification misled many users and highlighted the system’s inability to handle nuanced updates in real time.

Globally, millions of users rely on AI-driven notifications for quick and concise updates on news, sports, and entertainment. Apple’s AI notification system is integrated into its ecosystem of over a billion active devices, making it one of the most widely used platforms for real-time updates.

As more users depend on automated systems for news consumption, the stakes for accuracy and reliability grow higher. Any failure not only erodes trust but also underscores the critical need for robust safeguards in AI design and implementation.

Public and industry reaction

The backlash has been widespread. Social media users criticized Apple for its lack of quality control, questioning the accuracy of its AI systems. Media outlets labeled the issue a serious failure, warning it could erode user confidence in AI technologies. Many emphasized the need for human oversight, suggesting automated systems require regular checks by experienced editors.

Apple has yet to provide a detailed response, but analysts are urging immediate action, including stricter verification protocols and increased human oversight. While other tech companies have faced similar issues, Apple’s reputation as a leader in innovation places it under greater scrutiny. Critics argue that a company renowned for its innovation and attention to detail should be setting the standard for AI news accuracy.